Introduction

This page contains an overview of what we will cover in this course.

-

Of course from the course description, you can probably guess which viewpoint we will adopt. However, we would like to point out that both viewpoints are correct-- it depends on how you define what an "algorithm" is. More on this in a bit.

-

For the moment assume that we only care about images of cats or dogs (and nothing else).

-

In fact this could lead to the problem of overfitting, which we will encounter later on in the course.

-

Not because it is not important, but just so that we can discuss various topics within the supervised learning framework, which makes our lives a bit easier.

Under Construction

This page is still under construction. In particular, nothing here is final while this sign still remains here.

A Request

I know I am biased in favor of references that appear in the computer science literature. If you think I am missing a relevant reference (outside or even within CS), please email it to me.

Let's dive in, shall we?

We begin with a comment that Representative Alexandria Ocasio-Cortez (more commonly referred to as AOC, which is how we will refer to her for the rest of the document), made to Ta-Nehisi Coates during their chat at the 2019 MLK Now event where she says that algorithms can be biased:

This led to some immediate backlash from certain quarters, e.g. here is one such reaction:

Socialist Rep. Alexandria Ocasio-Cortez (D-NY) claims that algorithms, which are driven by math, are racist pic.twitter.com/X2veVvAU1H

— Ryan Saavedra (@RealSaavedra) January 22, 2019

Before we go into the merits of the two viewpoints

Let's keep the discussions civil

A lot of discussions in this course will be based on opinions, with which you might or might not agree. Please refrain from making personal comments when you disagree with someone. Please debate the idea and not the person/messenger.

Here is a video talking about the above principle in the context of talking about race:

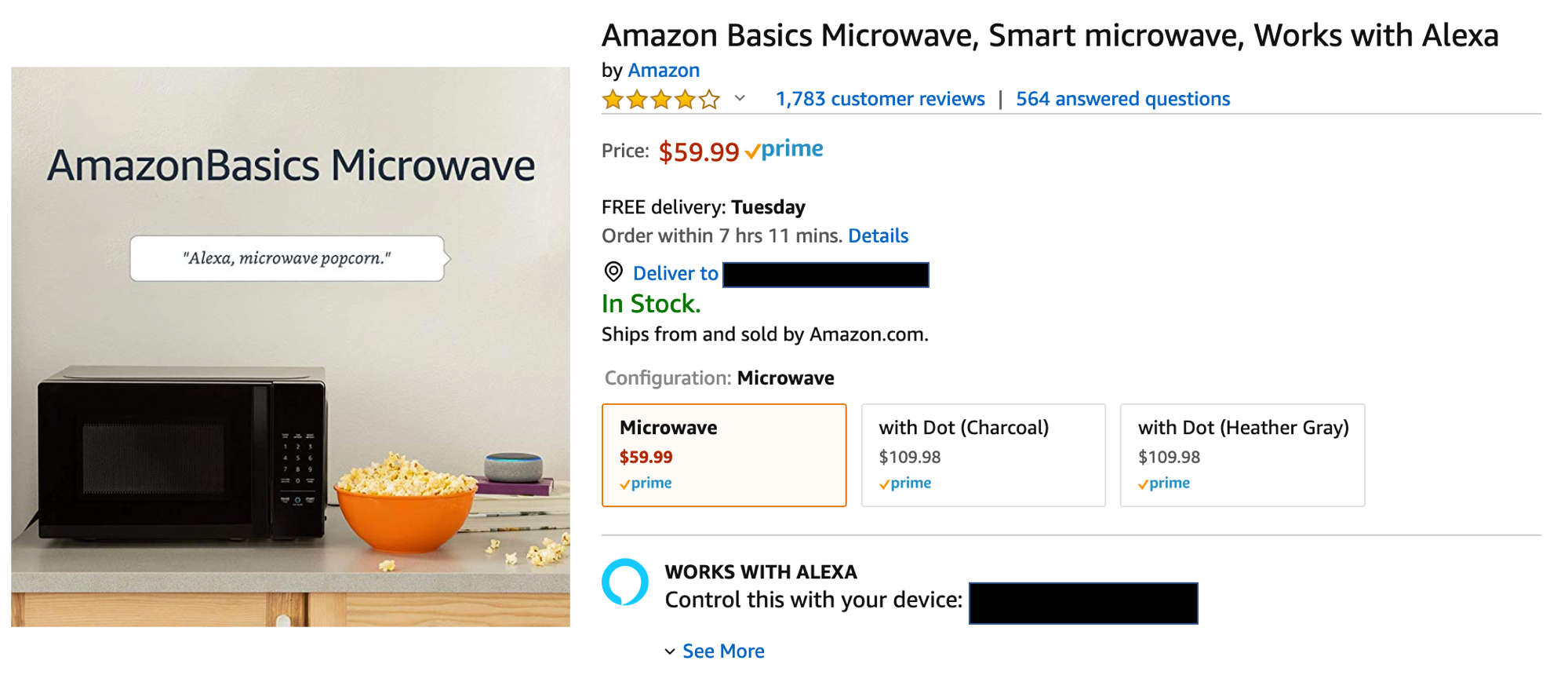

Just to give another example of what can happen when you "rush to judgment"-- sometime last year I was browsing Amazon and came across this page that Amazon was advertising on its homepage:

Click here to see why I was "jumping the gun"

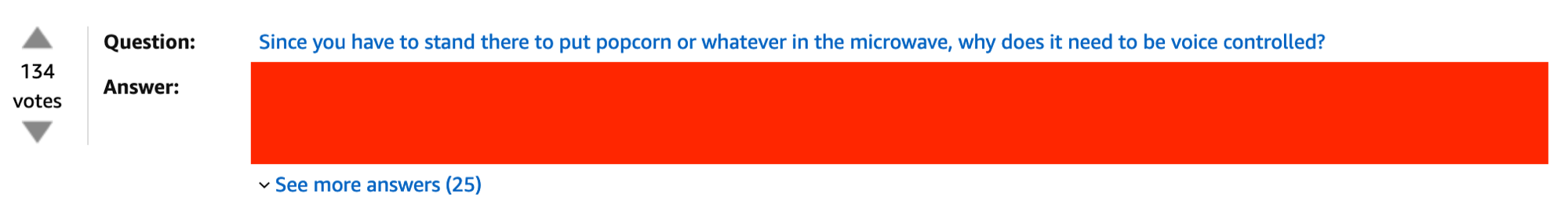

And the top answer was a great reminder to me that one should hold judgment and not rush to conclusions:

Back to Algorithms and Bias

Before we take any side in the "Algorithms can be biased" vs. "Math and hence algorithms cannot be biased" debate, let us do some activities.1

Google Image Searches

In this activity, we will go to Google Image search and search for the keywords ceo, heroine and beautiful woman.

Acknowledgments

Thanks to Jenny Korn for the first two search suggestions. The last search suggestion is from the show Atlanta (Warning! There is a fair bit of profanity in this video):

Searching for ceo

Click on this link to search for ceo on Google Images. Do you see any pattern in the images in the results? Do you see certain demographics that are not as well represented as others?

The link above did not work directly for me on all browsers. If this happens to you as well, go to Google Image search and then manually type in ceo in the search box.

My search results

Here is what I got with the above search:

Your search results might be a bit different, but overall they should not be too different. E.g., the images are predominantly of white male CEOs, while images of female CEOs and black CEOs are less represented. Also, in the first row of image results, the first two images of female CEOs (among the "headshots" of CEOs) are not of real CEOs. Only in the 10th picture do we get a real female CEO: Barbara Humpton, CEO of Siemens USA.

Questions to ponder

Do you think the above results are biased?

If not, why not?

If so,

- What do you think could be the reason for this bias?

- Do you think Google Images is responsible?

- Should Google Images do something to fix the bias?

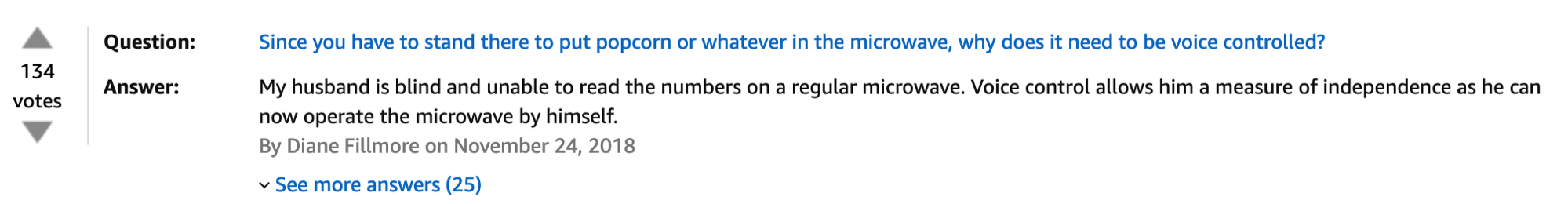

Searching for heroine

Click on this link to search for heroine on Google Images. Do you see any pattern in the images in the results? Do you see certain demographics that are not as well represented as others?

The link above did not work directly for me on all browsers. If this happens to you as well, go to Google Image search and then manually type in heroine in the search box.

My search results

Here is what I got with the above search:

Your search results might be a bit different, but overall they should not be too different. In the above search results, all of the returned images are those of South Asian women. For comparison, here is the link to search for hero on Google Images.

Questions to ponder

Do you think the above results are biased?

If not, why not?

If so,

- What do you think could be the reason for this bias?

- Do you think Google Images is responsible?

- Should Google Images do something to fix the bias?

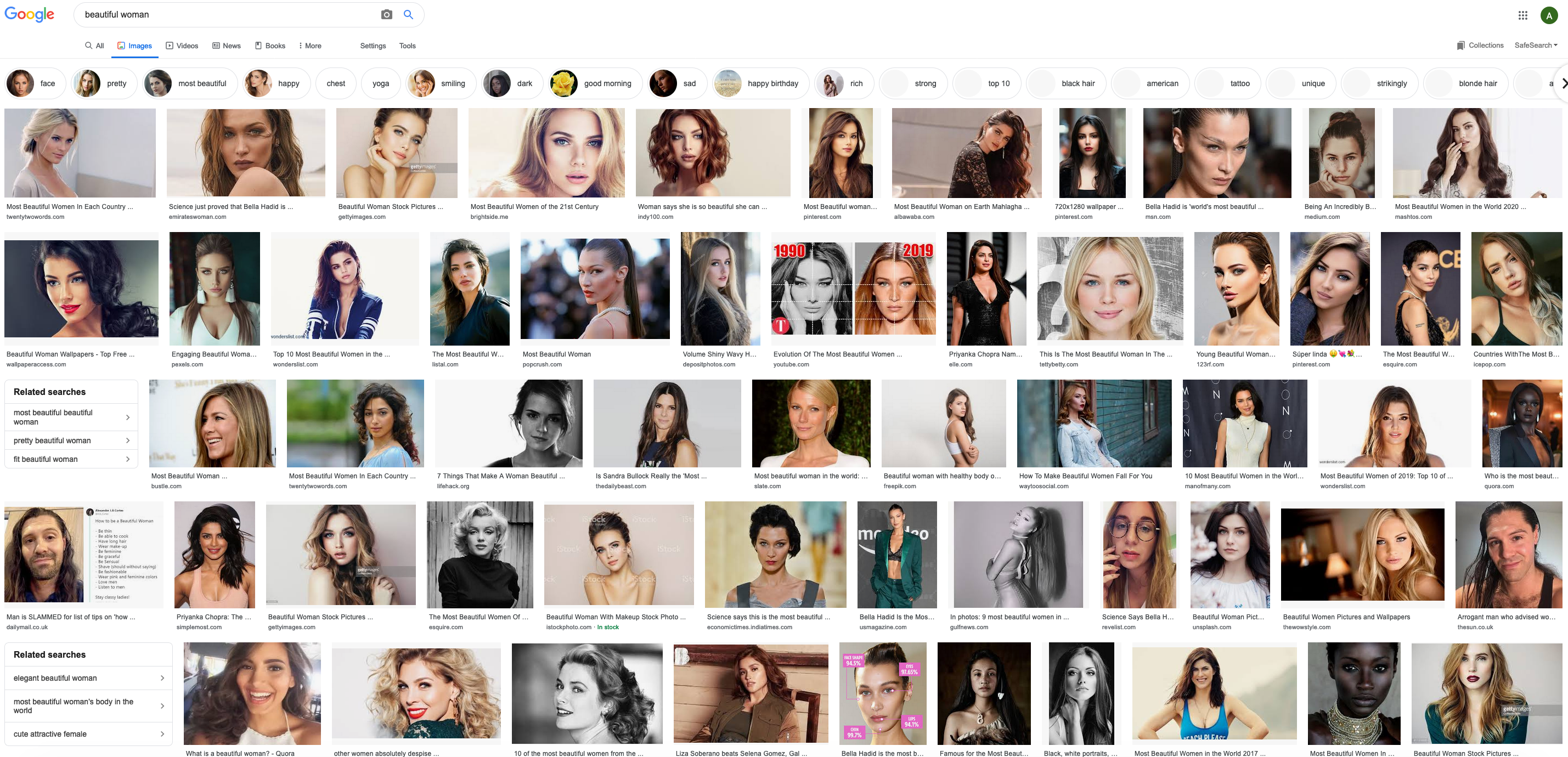

Searching for beautiful woman

Click on this link to search for beautiful woman on Google Images. Do you see any pattern in the images in the results? Do you see certain demographics that are not as well represented as others?

The link above did not work directly for me on all browsers. If this happens to you as well, go to Google Image search and then manually type in beautiful woman in the search box.

My search results

Here is what I got with the above search:

Your search results might be a bit different, but overall they should not be too different. In the above search results, pretty much all of the returned images are of white women. In fact, the first black woman in the search above appears just before a white man with long hair. Also, there are very few Asian women (some South Asian women and no East Asian women).

Questions to ponder

Do you think the above results are biased?

If not, why not?

If so,

- What do you think could be the reason for this bias?

- Do you think Google Images is responsible?

- Should Google Images do something to fix the bias?

Before we go on

US centric view

As you might have noticed, the above examples are all focused on the US. This is going to be an ongoing theme: in particular, when we talk about "society" in class we will be referring to societal issues in the US (e.g. when we talk about algorithms and law we will exclusively talk about US law).

This is not because other countries/societies are not important (and indeed some of the pitfalls we will see in this course come precisely from the US-centric view of much of the software that gets developed): it's just that since we are in the US, it is easier to tie societal issues to, say, recent events in the US (which makes them more relatable to the students in the course).

Further, many of the concepts that we talk about will be applicable anywhere in the world but the motivating examples will be (mostly) from the US.

We do acknowledge that this is somewhat of a choice of convenience, and so we wanted to state it up front so that y'all have this at the back of your mind as we go along in the course (and I'll try and remind y'all of this later on in the course as well).

Google Translate

In this activity, we will use Google Translate to translate some (carefully chosen) sentences from English to a language that does not use gendered pronouns (in this specific case Bengali) and back, and we will observe some interesting switches.

Acknowledgments

This activity is based on a 2017 Science paper by Caliskan, Bryson and Narayanan.

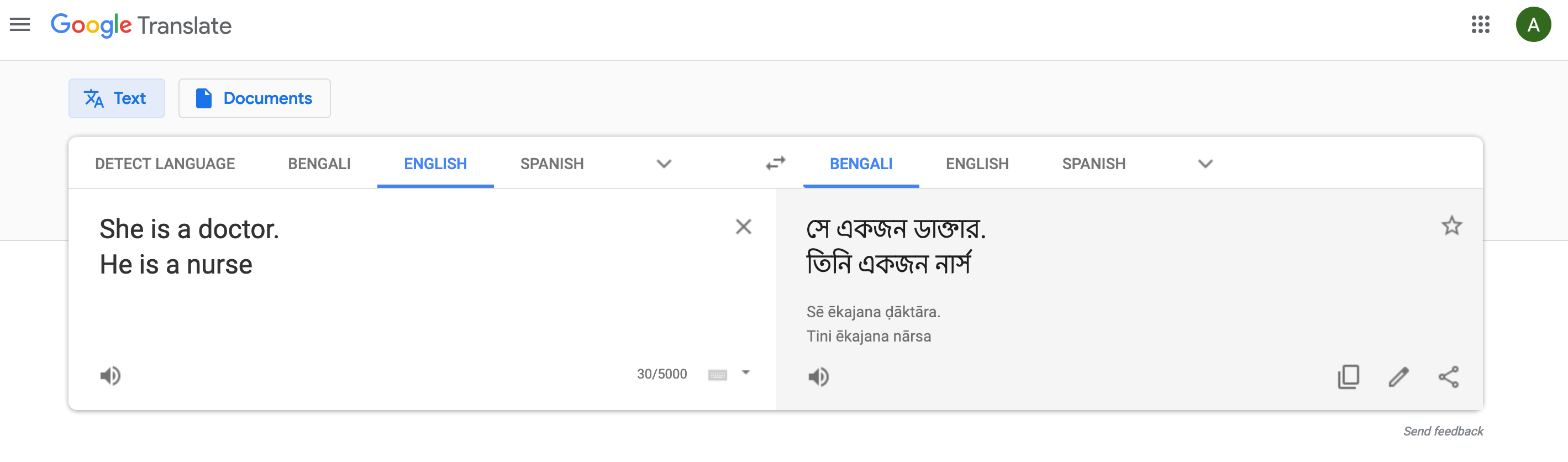

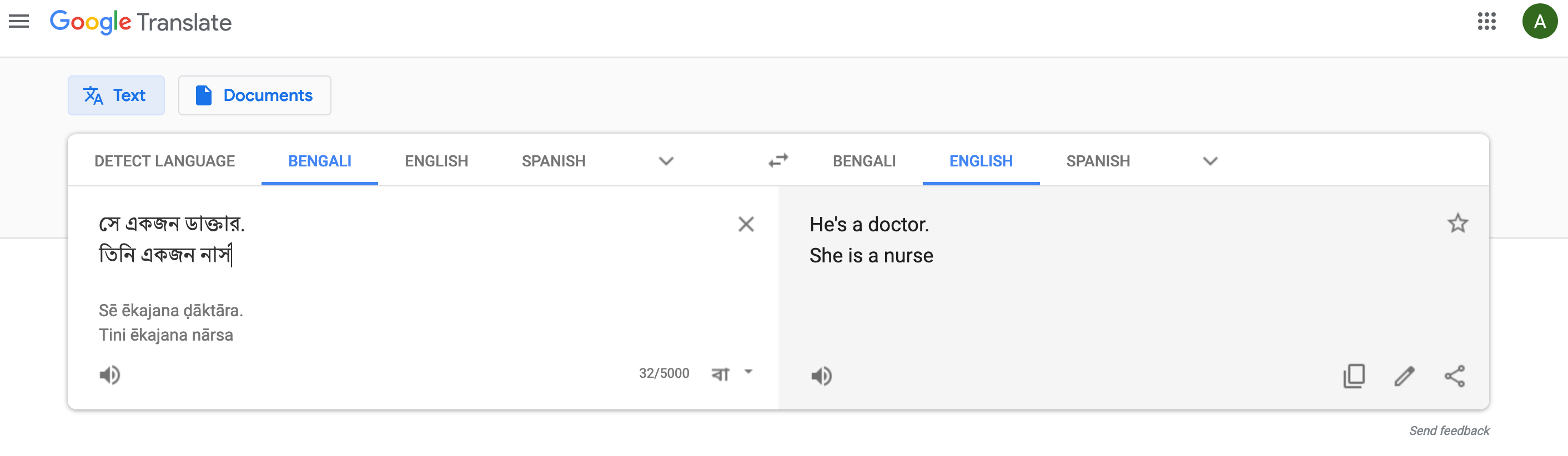

The doctor and nurse switch

Go to Google Translate. Pick the language on the left to be English and pick the language in the right box to be Bengali. Enter the following text in the left box:

She is a doctor He is a nurse

You should get a result along the following lines:

Now click on the "swap" icon. What result do you get?

This activity could also be done by replacing Bengali with another language that does not have gender-specific words. However, your results might vary (e.g. in this example using Turkish has "half the effect").

My search results

Here is what I got with the above swap:

You should have gotten a result like the above. Note that the genders of the doctor and nurse got swapped!

Questions to ponder

Do you think the above results are biased?

If not, why not?

If so,

- What do you think could be the reason for this bias?

- Do you think Google Translate is responsible?

- Should Google Translate do something to fix the bias?

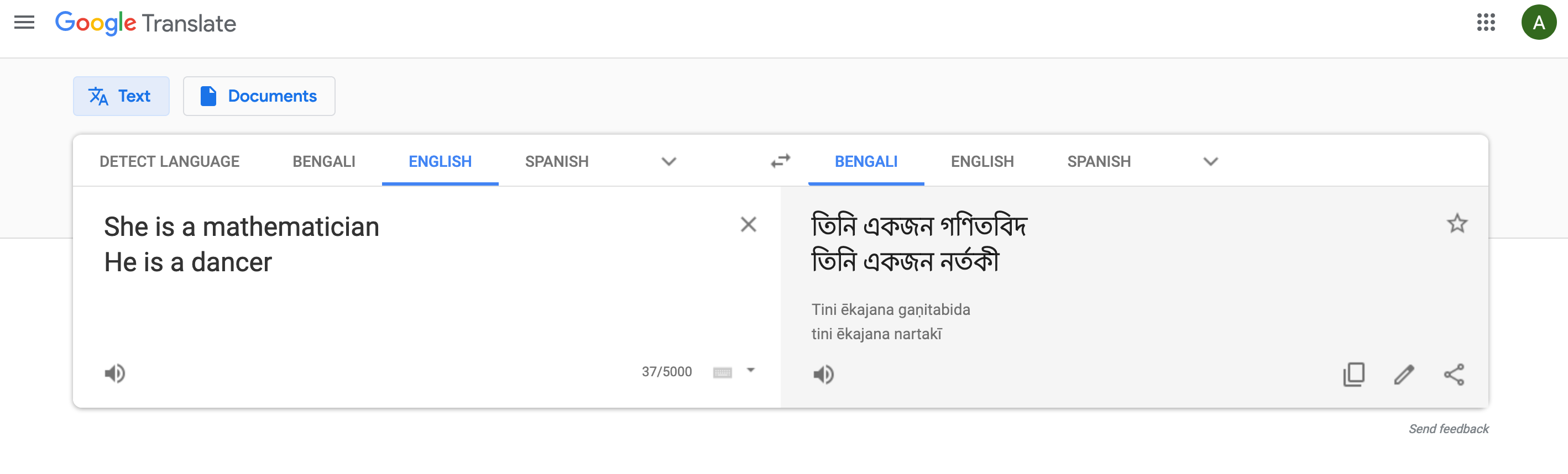

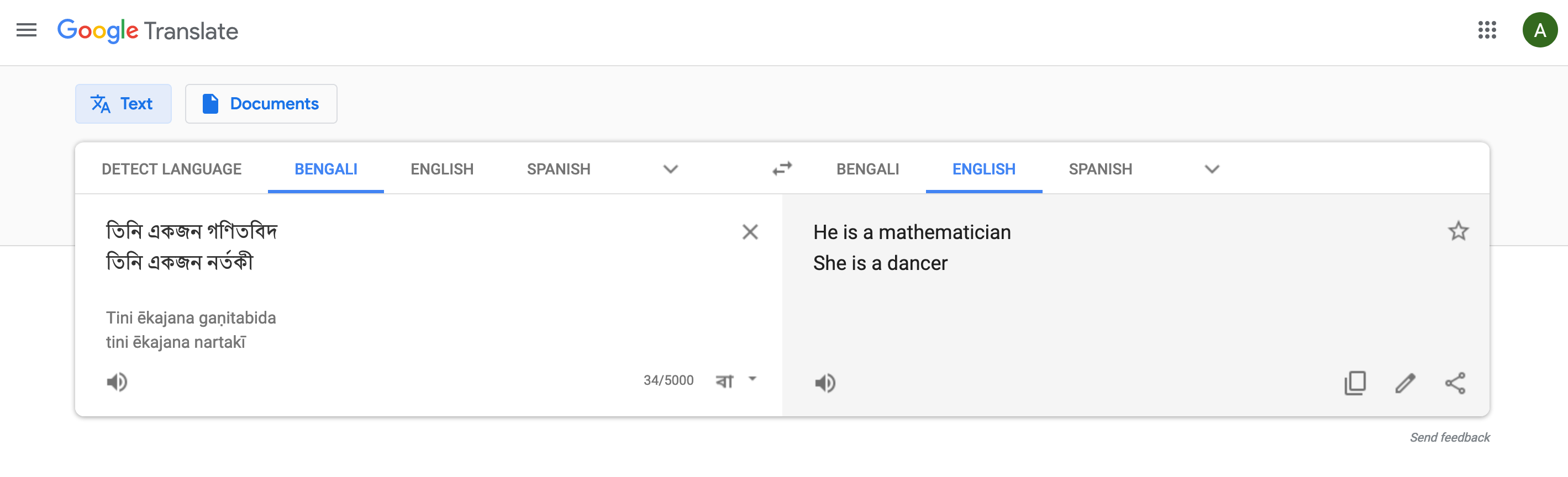

The mathematician and dancer switch

Go to Google Translate. Pick the language on the left to be English and pick the language in the right box to be Bengali. Enter the following text in the left box:

She is a mathematician He is a dancer

You should get a result along the following lines:

Now click on the "swap" icon. What result do you get?

This activity could also be done by replacing Bengali with another language that does not have gender-specific words. However, your mileage might vary (e.g. using Turkish shows the full effect for this activity).

My search results

Here is what I got with the above swap:

You should have gotten a result like the above. Note that the genders of the mathematician and dancer got swapped!

Questions to ponder

Do you think the above results are biased?

If not, why not?

If so,

- What do you think could be the reason for this bias?

- Do you think Google Translate is responsible?

- Should Google Translate do something to fix the bias?

What's up with this Google bashing?

Why is Google being singled out?

You might be wondering why we are focusing only on Google and not on other platforms, e.g. Facebook.

The main reason is that anyone can use Google irrespective of whether they have a Google account or not, while the same is not true of Facebook (I personally do not have a Facebook account and hence did not run any Facebook-related activities).

There are lots of other systems where results might not be as one might expect but as mentioned above, running such activities means one must have the corresponding platform/software installed/signed on. Next, we will see a video of such an experiment on face recognition systems.

Face Recognition

Acknowledgments

The video below is based on a 2018 FAT* paper by Buolamwini and Gebru.

Questions to ponder

Do you think the above video shows that the results of the face recognition systems were biased?

If not, why not?

If so,

- What do you think could be the reason for this bias?

- Do you think the face recognition software is responsible?

- Should the companies do something to fix the bias?

Why does representation bias matter?

Some of the examples above (e.g. the Google images search for beautiful woman) are examples of representational harms that Kate Crawford outlined in her NIPS 2017 keynote talk:

We will come back to the talk above later in the course, but here is a great video showing what representational harm can lead to:

Is AOC correct then?

Based on the examples above it would seem that algorithms can be biased. However, in the spirit of being open to other ideas, is it possible that under some interpretation, "algorithms are based on math and hence cannot be biased" actually makes sense? It turns out that this interpretation might have some validity in the traditional definition of an "algorithm."

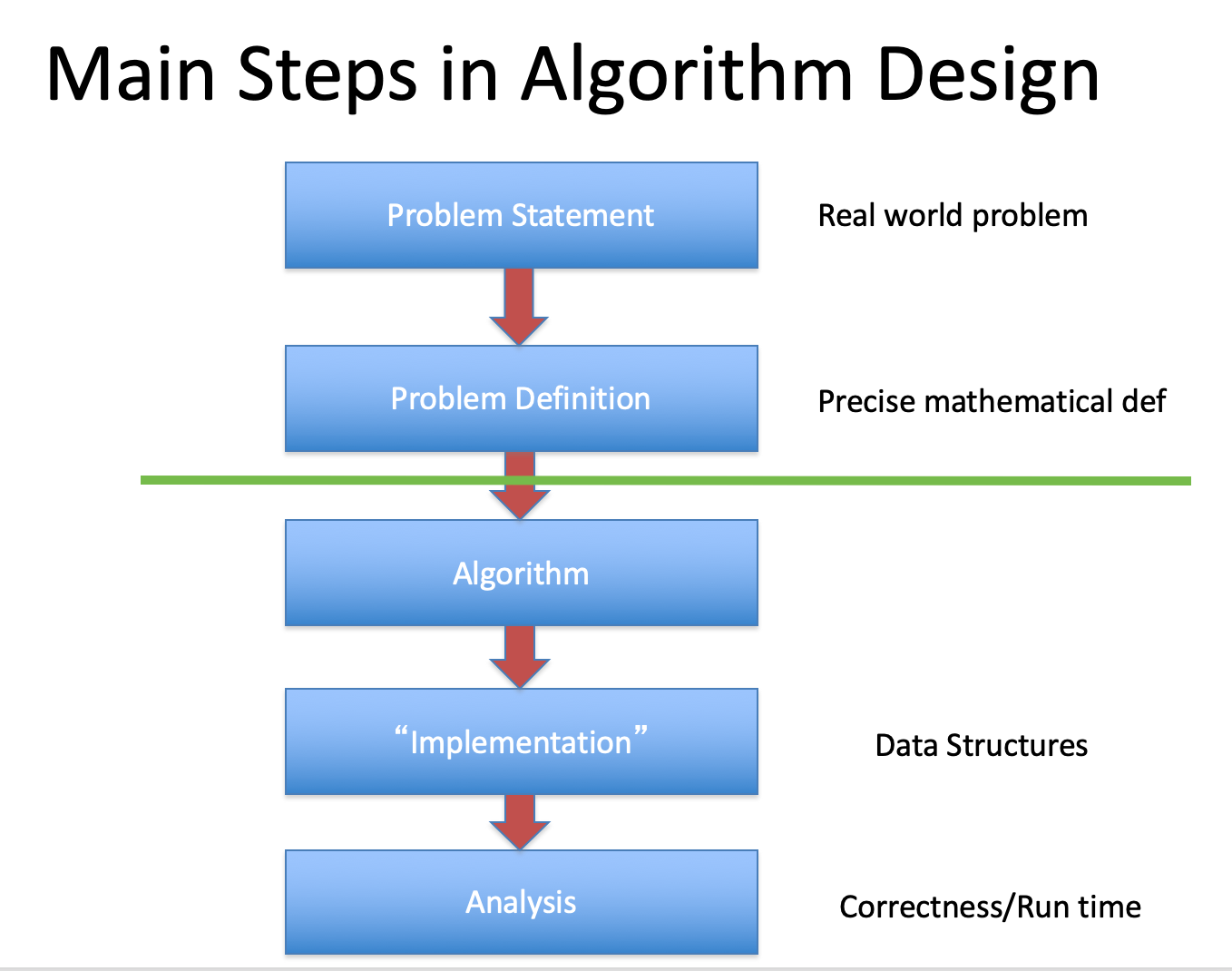

Traditional definition of an algorithm

Here is how Don Knuth defines an algorithm:

An algorithm is a finite, definite, effective procedure with some input and some output.

Independence of the algorithm from the problem

As you might have noticed, the definition above does not talk about the relationship between the inputs and outputs. In other words, the definition of an algorithm is independent of the problem it is trying to solve.

Of course we design algorithms to solve specific problems, so in the traditional study of algorithms we talk about the correctness of an algorithm when stating whether a given algorithm solves a given problem. However, even then, there is traditionally a clear separation between the problem statement and the algorithm used to solve the problem. For example, here is the flowchart that I still use in my undergraduate algorithms course at UB:

The specific terms in the flowchart are not that important

It is fine if you do not understand all the terms in the flowchart above (e.g. if you do not know what "Data Structure" means that is perfectly fine). The only thing that matters is the separation of problem definition and algorithm design (which we will get back to very shortly).

Since this is more relevant, we will now elaborate a bit on what we mean by problem definition. A problem definition is (typically) a mathematical definition that clearly states the format of the input, the format of the output and precisely defines how each input gets mapped to an output.

In the flowchart, everything above the green line is related to defining the problem and everything below the green line is related to algorithm design. In particular, we assume that the problem is defined with mathematical precision before we start designing the algorithm to solve it. Additionally, we say an algorithm is correct if and only if on ALL possible valid inputs, the algorithm outputs exactly the output that is mathematically required by the problem statement.
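To make the separation between problem definition and algorithm design concrete, here is a minimal Python sketch. It uses sorting as a stand-in problem (my choice for illustration; it is not taken from the flowchart), and the function names are hypothetical. The problem definition is one function and the algorithm is another, and neither refers to the other.

```python
from collections import Counter
from itertools import product


def meets_sorting_spec(inp, out):
    """Problem definition: `out` is a rearrangement of `inp` in non-decreasing order."""
    is_rearrangement = Counter(inp) == Counter(out)
    is_ordered = all(out[i] <= out[i + 1] for i in range(len(out) - 1))
    return is_rearrangement and is_ordered


def insertion_sort(values):
    """One possible algorithm for the problem above (many others exist)."""
    result = []
    for v in values:
        pos = len(result)
        # Walk left past every element that is larger than v.
        while pos > 0 and result[pos - 1] > v:
            pos -= 1
        result.insert(pos, v)
    return result


def passes_on_small_inputs(algorithm, max_len=4, alphabet=(0, 1, 2)):
    """Check the problem definition on every list over `alphabet` up to `max_len` long."""
    for n in range(max_len + 1):
        for inp in product(alphabet, repeat=n):
            if not meets_sorting_spec(list(inp), algorithm(list(inp))):
                return False
    return True


print(passes_on_small_inputs(insertion_sort))
print(passes_on_small_inputs(lambda xs: xs))  # "do nothing" is not a correct algorithm
```

Note that checking a finite set of inputs is weaker than the notion of correctness above, which quantifies over ALL valid inputs; establishing that requires a proof, not just tests.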

Traditional Algorithms could be claimed to be not biased

Under the strict requirements for algorithm design that we have traditionally used (as described above), it is technically possible to argue that algorithms cannot be biased. However, as we will see shortly, the traditional definition of an algorithm is no longer valid.

The modern algorithm

Let us start with a fairly benign problem: determining whether an image is that of a cat or a dog.

Cat vs. Dog?

Try this for a few minutes: Come up with a mathematically precise definition of how, given an image, we can classify it as either cat or dog.

In particular, when your definition gets as its input a picture of Billy, it should output cat, and if you feed it a picture of Warren, it should output dog.

Cat vs. dog is a "solved" problem

While you spend the rest of the semester trying to come up with a mathematically precise definition of when an image has a cat vs. when an image has a dog, let us see how an existing platform already solves this problem. In fact, we will use the "reverse image search" capability of Google Images to try and identify the object in Billy and Warren's images.

Reverse Image search for Billy and Warren

Go to Google Images and then perform a reverse image search for Billy and Warren. In particular, click on the "camera" icon. Choose the Paste Image URL option using this link for Billy and this link for Warren.

My search results

Here is what I got when searching with Billy's image:

and here is what I got when searching with Warren's image:

Your search result might be a bit different but overall results should not be too different. In the above search result, Billy is correctly identified as a domesticated short-haired cat (and hence a cat) and Warren is correctly identified as a pit bull (and hence a dog).

So did Google Images solve the cat vs. dog problem?

Acknowledgments

This activity is based on one of the experiments done in the 2019 CSCW paper by Ali, Sapiezynski, Bogen, Korolova, Mislove and Rieke.

I suspect there is an earlier paper that should be credited for this. If you have a better reference, please email me.

Not so fast....

Let us repeat the above exercise but with this modified picture of Billy:

and this picture of Warren:

Actually, the above pictures are the same as before, except that I fiddled with the image settings. Human eyes should still be able to recognize a cat and a dog respectively.

Here are the detailed instructions. Go to Google Images and then perform a reverse image search for Billy and Warren. In particular, click on the "camera" icon. Choose the Paste Image URL option using this link for the modified picture of Billy and this link for the modified picture of Warren.

My search results

Let us start with the modified picture of Warren:

Warren has suddenly changed from pit bull to companion dog! Of course one could argue that he is still classified as a dog. Let us now see how the modified version of Billy fared:

Billy is now classified as a fish!

Your results should be similar. If you get some other results, please let me know!

Bonus point opportunity!

Can you change Warren's original image so that (1) it is still recognizable as a dog but (2) Google Images classifies the modified image as something other than a dog?

The best submission (email me a copy or link to your modified image of Warren) will be given five (5) bonus points! Note that if for the best submission Google Image still recognizes the modified Warren picture as (some kind of) a dog, then no one gets any points :-)

What is going on?

Well, that was a bit weird. How did Google Images get thrown off by changes in pictures that we as humans seem not to be bothered by when deciding between cats and dogs? We will talk about the technical details in later lectures, but for now let us give a quick, very high level overview of how the Google Images algorithm works and try to make some sense of the odd behavior that we just saw.

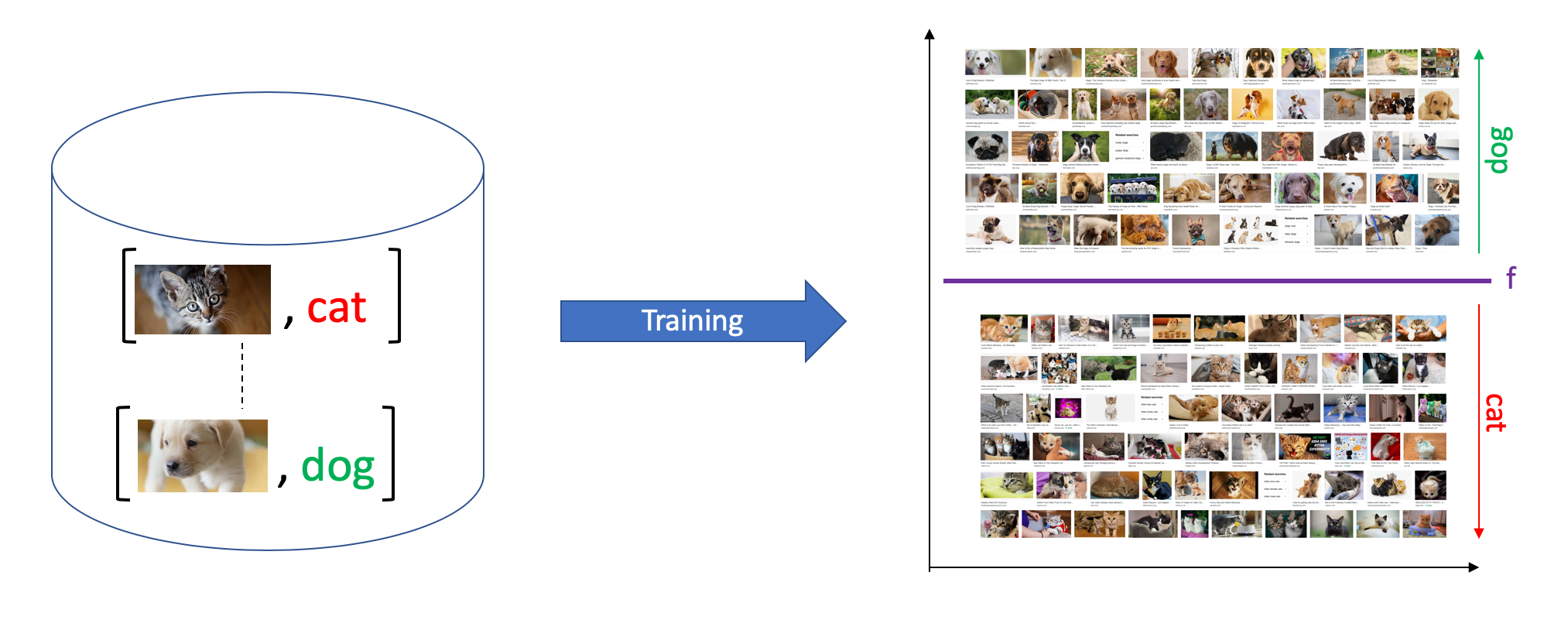

Supervised Learning

In this course, we will focus on supervised learning. In the context of classifying images as cats or dogs 2, the algorithm works as follows:

- In the training phase, the algorithm takes in (a lot!) of labeled examples: i.e. it takes in a lot of images of cats and dogs, each of which is labeled cat or dog (in the figure below this is represented as a pair $[$image, label$]$). Then the system tries to "fit" a function $f$ to the labeled input. In other words, the function $f$, when given one of the training images, outputs the correct label of cat or dog (or more accurately, does this with as little error as possible). Here is a super simplified cartoon of this process (below on the right the learned function $f$ is a linear function that perfectly separates cats and dogs-- such perfect separation almost never happens in real life though!3):

What is a model?

We will frequently use the word model, which in the above description is nothing but the function $f$ that distinguishes pictures of cats and dogs. Of course, it is a very interesting question on how one might get hold of such a "magical" model $f$-- we will punt on this for a few lectures and will talk briefly about it later.

- In the inference phase, the system uses the same function $f$ that it learned in the training phase on a new image $I$ and outputs the label $f(I)$. Continuing the cartoon description of the training phase above, let us assume that we fed the $f$ generated above the image of Warren; then the situation might be as follows (Warren gets mapped close to a pit bull and hence falls on the "dog side" of $f$, leading to him being classified as a dog):

As one can imagine, unsupervised learning is also a thing, but we will not consider it in this course. 4
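The training and inference phases described above can be sketched in a few lines of Python. This is only a toy under loud assumptions: each "image" is represented by two made-up numbers, the labeled examples are invented for illustration, and the perceptron rule is just one simple way to fit a linear $f$ (I am not claiming this is how Google Images works). The names train_perceptron and predict are hypothetical.

```python
def train_perceptron(examples, max_epochs=1000):
    """Training phase: fit a linear function f(x) = w . x + b to labeled examples.

    `examples` is a list of ((x1, x2), label) pairs with label "cat" or "dog".
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for (x1, x2), label in examples:
            target = 1 if label == "dog" else -1
            score = w[0] * x1 + w[1] * x2 + b
            if target * score <= 0:  # wrong side of (or exactly on) the line
                w[0] += target * x1
                w[1] += target * x2
                b += target
                mistakes += 1
        if mistakes == 0:  # every training example is now labeled correctly
            break
    return w, b


def predict(model, features):
    """Inference phase: apply the learned f to a new input."""
    w, b = model
    score = w[0] * features[0] + w[1] * features[1] + b
    return "dog" if score > 0 else "cat"


# Made-up [image, label] pairs; each image is reduced to two hypothetical features.
training_set = [
    ((1.0, 1.0), "cat"), ((2.0, 1.0), "cat"), ((1.0, 2.0), "cat"),
    ((4.0, 4.0), "dog"), ((5.0, 4.0), "dog"), ((4.0, 5.0), "dog"),
]

model = train_perceptron(training_set)
print(predict(model, (4.5, 4.0)))  # a new input that sits close to the dog examples
```

The toy data is linearly separable, so the perfect separation here is by construction; as noted above, that almost never happens in real life.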

The problem-algorithm divide is no more

Irrespective of your preference for cats vs. dogs, the main takeaway from the discussion above is that in the modern algorithmic framework of supervised learning, there is no longer a clear distinction between problem description and algorithm design as we had for traditional algorithms. Indeed, in this setup, we are defining the problem as well as designing the algorithm at the same time!

Another take

See the Medium article on the modern algorithm by Suresh Venkatasubramanian for a related take on how the modern algorithm paradigm is different from the traditional notion of algorithms. Suresh also talks about the traditional analogy between recipes and algorithms (which I did not make) and situates everything in the context of a recipe for sambar.

Further, as illustrated in the above example, the function $f$ being learned from the labeled examples need not be the function we want the system to learn. For example, the Google Images classification for cats and dogs was not quite doing that (see the confusion between the modified picture of Billy and a fish). We will see multiple times in this course that this can be a big problem. In other words, we have that:

Coming back to AOC

It is definitely possible that an algorithm trained on biased data will learn a biased function $f$ leading to biased outcomes. In this sense AOC was right!
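Here is a minimal Python sketch of how this can happen. Everything in it is made up for illustration: there are two hypothetical groups A and B, a single numeric feature, and a ground truth in which the same feature value means different things for the two groups. Group B is underrepresented in the training data, and the learned $f$ is the threshold rule with the fewest training errors. This shows just one of several ways in which skewed data can lead to skewed outcomes.

```python
def true_label(x, group):
    """Made-up ground truth: the right cutoff differs between the two groups."""
    cutoff = 5 if group == "A" else 3
    return 1 if x >= cutoff else 0


def fit_threshold(examples):
    """Learn f(x) = 1 iff x >= t, choosing the t with the fewest training errors."""
    candidates = [k + 0.5 for k in range(9)]  # 0.5, 1.5, ..., 8.5

    def errors(t):
        return sum((1 if x >= t else 0) != y for x, y, _ in examples)

    return min(candidates, key=errors)


def error_rate(t, examples, group):
    """Fraction of the given group's examples that the threshold t gets wrong."""
    rows = [(x, y) for x, y, g in examples if g == group]
    wrong = sum((1 if x >= t else 0) != y for x, y in rows)
    return wrong / len(rows)


# Skewed training data: 8 examples from group A, only 2 from group B.
train = [(x, true_label(x, "A"), "A") for x in range(1, 9)]
train += [(x, true_label(x, "B"), "B") for x in (2, 3)]

# Balanced evaluation data: 8 examples from each group.
test = [(x, true_label(x, g), g) for g in ("A", "B") for x in range(1, 9)]

t = fit_threshold(train)
print(t, error_rate(t, test, "A"), error_rate(t, test, "B"))
```

The learning step is "just math" and treats every training example identically, yet the learned threshold fits group A and makes all of its mistakes on group B.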

Rest of the course

In the rest of the course, we will expand upon the above observations and take a deeper dive into why AOC was right in her comments at the top of this page. Roughly speaking, the course will cover the following:

- We will go a bit deeper into how supervised learning systems work. In particular, we will use Hal Daumé III's blog post on the machine learning pipeline to talk about various stages in the modern algorithm pipeline.

- We will then consider notions of bias, discrimination and fairness. In particular, we will consider some technical solutions to these issues.

- We will then see that technical solutions by themselves are not enough and we have to consider the entire machine learning pipeline and its interactions with society. We will also learn how to give up on a "silver bullet" solution and learn more about how technology is not the center of everything :-) Among other things, we will consider interactions of machine learning and the law, and how socio-technical systems could be a way forward to reason about algorithms and society.